Understanding HNSW in ChromaDB: The Engine Behind High-Performance Vector Search

As Retrieval-Augmented Generation (RAG) becomes a core architectural pattern for modern AI applications, the efficiency of vector search matters more than ever. Developers rely on vector databases not only to store high-dimensional embeddings but also to retrieve relevant information with low latency and high accuracy, especially at scale. ChromaDB, one of the most widely used open-source vector databases, achieves this performance through Hierarchical Navigable Small World (HNSW) graphs, a graph-based index structure for approximate nearest-neighbor (ANN) search.

HNSW is an ANN algorithm that organizes vectors into a multi-layer graph structure. The upper layers form a sparse network that allows long-range “jumps” across embedding space, while the lower layers gradually increase in density and local connectivity. This hierarchical architecture enables the search algorithm to quickly traverse from coarse-grained layers to the fine-grained, densely connected bottom level—ultimately delivering near-logarithmic query complexity. Instead of scanning through millions of embeddings, the system efficiently navigates the graph to locate the nearest neighbors with high recall. This balance of speed and accuracy makes HNSW an ideal fit for latency-sensitive RAG applications, conversational agents, semantic search systems, and any workload relying on rapid vector similarity lookups.
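To make the "sparse on top, dense at the bottom" picture concrete, the sketch below samples a top layer for each vector using the exponentially decaying rule from the HNSW paper, level = floor(-ln(U) * mL) with mL = 1 / ln(M), and then counts how many nodes land on each layer. It illustrates the layer distribution only, not graph construction or search, and the function names are made up for this example.

```python
import math
import random
from collections import Counter


def sample_level(m: int, rng: random.Random) -> int:
    """Draw the top layer for a new node: floor(-ln(U) * mL), with mL = 1 / ln(M)."""
    m_l = 1.0 / math.log(m)
    u = 1.0 - rng.random()  # value in (0, 1], so log(u) is always defined
    return int(-math.log(u) * m_l)


def layer_sizes(num_vectors: int, m: int = 16, seed: int = 0) -> list[int]:
    """Count nodes per layer; a node whose top layer is L appears on layers 0..L."""
    rng = random.Random(seed)
    top_levels = Counter(sample_level(m, rng) for _ in range(num_vectors))
    sizes = []
    for layer in range(max(top_levels) + 1):
        sizes.append(sum(n for level, n in top_levels.items() if level >= layer))
    return sizes


if __name__ == "__main__":
    for layer, size in enumerate(layer_sizes(100_000)):
        print(f"layer {layer}: {size} nodes")
```

With M = 16, each layer holds roughly one sixteenth of the nodes of the layer below it, which is why a search can start from a handful of entry points at the top and refine its position as it descends.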

A key aspect of HNSW’s flexibility in ChromaDB lies in the space parameter, which determines the distance function used throughout the index. ChromaDB natively supports three space types: "l2" (squared Euclidean distance, the default), "cosine" (directional similarity), and "ip" (inner product). This choice directly influences retrieval behavior: cosine distance compares direction only and ignores magnitude, which suits text embeddings from large language models or sentence transformers; Euclidean distance is a natural fit for geometric embedding spaces where magnitude carries meaning; and inner product works well when maximizing alignment between vectors. For unit-normalized embeddings, all three produce the same ranking. Because HNSW operates directly within the chosen metric, ChromaDB can adapt to a wide range of embedding models without requiring custom indexing logic or post-processing steps. The result is a vector search engine that aligns closely with the mathematical properties of the underlying embeddings.
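The three space options can be sketched in plain Python. The formulas below follow the conventions documented for Chroma's underlying HNSW library as far as I know them (squared L2, 1 minus the dot product, 1 minus cosine similarity); treat them as an assumption and check the documentation for your installed version. The helper names are illustrative only.

```python
import math


def l2_squared(a, b):
    """Squared Euclidean distance (the "l2" space)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def inner_product_distance(a, b):
    """1 - dot(a, b) (the "ip" space); smaller means better aligned."""
    return 1.0 - sum(x * y for x, y in zip(a, b))


def cosine_distance(a, b):
    """1 - cosine similarity (the "cosine" space); ignores vector length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


if __name__ == "__main__":
    a, b = [3.0, 4.0], [4.0, 3.0]
    print("raw:", l2_squared(a, b), inner_product_distance(a, b), cosine_distance(a, b))
    na, nb = normalize(a), normalize(b)
    print("unit:", l2_squared(na, nb), inner_product_distance(na, nb), cosine_distance(na, nb))
```

On unit-length vectors the inner-product distance equals the cosine distance, and the squared L2 distance is exactly twice that value, so the choice of space mostly matters when embeddings are not normalized.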

Beyond distance metrics, HNSW in ChromaDB exposes configurable parameters such as M (the maximum number of bi-directional links per node), ef_construction (the size of the candidate list explored while building the index), and ef (the size of the candidate list explored at query time). These knobs give developers fine-grained control over the accuracy-performance tradeoff. Higher values generally increase recall but cost more memory, build time, or query latency; lower values favor faster throughput. Because HNSW supports incremental insertion, new vectors can be added without rebuilding the index, making it well suited for dynamic workflows like real-time document ingestion or continuous model updates.
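As a rough sketch of how these knobs might be set, the snippet below passes HNSW settings through collection metadata. The `hnsw:`-prefixed key names are the ones I know from Chroma releases that configure the index via metadata; newer releases also accept a dedicated `configuration` argument, so treat the keys as an assumption and confirm them against the documentation for your version. The values and the collection name are illustrative, not recommendations.

```python
# Assumed key names (metadata-style configuration); values are illustrative only.
HNSW_SETTINGS = {
    "hnsw:space": "cosine",       # distance function: "l2", "cosine", or "ip"
    "hnsw:M": 32,                 # max links per node: more memory, better recall
    "hnsw:construction_ef": 200,  # candidate list size while building the graph
    "hnsw:search_ef": 100,        # candidate list size at query time
}


def create_collection(name: str = "docs"):
    """Create (or open) a collection with the HNSW settings above.

    chromadb is imported lazily so this file can be read and loaded
    even on a machine where the package is not installed.
    """
    import chromadb

    client = chromadb.Client()  # in-memory client, suitable for experiments
    return client.get_or_create_collection(name=name, metadata=HNSW_SETTINGS)
```

A reasonable workflow is to start from the defaults, measure recall against a brute-force baseline on a sample of queries, and raise the search-time value first, since it can be tuned without paying the build cost again.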

ChromaDB’s integration of HNSW extends beyond raw vector search. It pairs with metadata and document-level filters, allowing developers to combine similarity search with structured constraints such as category, timestamp, source type, or any custom attribute. In addition, the database’s flexible persistence options and client libraries make it easy to integrate HNSW-powered retrieval inside RAG pipelines, agent architectures, or operational ML workflows. Whether serving as an embedded engine within a Python application or deployed as a distributed service, ChromaDB is designed to preserve HNSW’s performance characteristics as collections grow into millions of entries.
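A filtered similarity query might look like the sketch below. It assumes `collection` is a Chroma collection whose records carry hypothetical `category` and `year` metadata fields; the `where` clause uses Chroma's operator syntax (`$and`, `$eq`, `$gte`), and the helper names are invented for this example.

```python
def build_filter(category: str, min_year: int) -> dict:
    """Metadata filter: records in `category` dated `min_year` or later."""
    return {
        "$and": [
            {"category": {"$eq": category}},
            {"year": {"$gte": min_year}},
        ]
    }


def filtered_search(collection, query_embedding, category, min_year, k=3):
    """Run a nearest-neighbor query restricted to records matching the filter."""
    return collection.query(
        query_embeddings=[query_embedding],  # one query vector
        n_results=k,                         # number of neighbors to return
        where=build_filter(category, min_year),
    )
```

Keeping the filter construction in its own function makes it easy to unit-test the structured constraints separately from the vector search itself.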

As organizations increasingly leverage RAG to ground LLMs in proprietary knowledge, retrieval speed and accuracy are becoming competitive differentiators. HNSW provides the computational backbone necessary to meet those demands, enabling ChromaDB to deliver fast, flexible, and high-recall vector search at scale. For engineers looking to build high-performance AI systems—from enterprise knowledge assistants to augmented analytics—understanding HNSW is key to unlocking ChromaDB’s full potential.

LangChain vs. LlamaIndex in a RAG Context

LangChain and LlamaIndex are two of the most widely used frameworks for building Retrieval-Augmented Generation (RAG) systems, but they serve different roles within the pipeline. LangChain provides a comprehensive toolkit for orchestrating the entire RAG workflow—retrieval, prompt construction, tool integrations, agents, and post-processing—while LlamaIndex focuses more deeply on data ingestion, indexing, and retrieval quality. In a typical RAG setup, LangChain functions as the “application orchestrator,” whereas LlamaIndex serves as the “data engine” responsible for building a highly optimized knowledge base.

In the data preparation and indexing stage, LlamaIndex offers advanced features for chunking, metadata extraction, document hierarchies, and hybrid or graph-based index structures. This makes it exceptionally strong when the quality of retrieved information depends on how the knowledge base is constructed. LangChain also supports document loading and embedding, but LlamaIndex is built specifically to give developers fine-grained control over how data is transformed into vector indexes. These indexing-centric capabilities make LlamaIndex especially effective for improving RAG retrieval relevance and precision.

When orchestrating the live retrieval and generation process, LangChain provides greater flexibility and modularity. It excels at building multi-step chains, coordinating multiple retrievers, calling external tools or APIs, routing queries, and composing different prompts. This makes LangChain a strong choice for complex RAG applications that require logic flows, evaluation loops, or agent-style reasoning. While LlamaIndex also supports retrieval pipelines and query engines, its primary focus is ensuring that the data is structured and accessible rather than orchestrating multi-step decision workflows.

Both frameworks integrate seamlessly with modern vector databases, including ChromaDB. ChromaDB is a popular choice for storing embeddings due to its open-source nature, high performance, and flexible metadata filtering. In LangChain, ChromaDB can be plugged in with just a few lines of code as a VectorStore, allowing LangChain chains and agents to retrieve relevant documents efficiently. LlamaIndex also supports ChromaDB as a storage backend, enabling developers to use LlamaIndex’s powerful indexing and query abstractions on top of the same vector database. This means teams can use ChromaDB as a shared, persistent vector layer regardless of whether the orchestration is done through LangChain, LlamaIndex, or a combination of both.

In production RAG deployments, LangChain and LlamaIndex often work side-by-side, and ChromaDB acts as a reliable vector storage layer for both. LlamaIndex can handle the data ingestion, embedding, and index construction, storing vectors inside ChromaDB. LangChain can then use that same ChromaDB instance to retrieve relevant chunks during runtime, build prompts, and drive multi-step reasoning flows. The result is a flexible, scalable, and high-quality RAG system: LlamaIndex optimizes the data and indexing layer, LangChain manages orchestration and logic, and ChromaDB provides a shared high-speed vector store that both can rely on.
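The division of labor described above can be sketched as two small functions that share one persistent Chroma collection. This assumes the `chromadb`, `llama-index-vector-stores-chroma`, and `langchain-chroma` packages are installed; the path and collection name are hypothetical, and the embedding objects are passed in by the caller. Both sides must use the same embedding model, otherwise the query vectors will not be comparable with the stored ones.

```python
COLLECTION_NAME = "shared_docs"  # hypothetical collection name
CHROMA_PATH = "./chroma_store"   # hypothetical on-disk location


def ingest_with_llamaindex(documents, embed_model):
    """Index LlamaIndex documents into the shared Chroma collection."""
    import chromadb
    from llama_index.core import StorageContext, VectorStoreIndex
    from llama_index.vector_stores.chroma import ChromaVectorStore

    client = chromadb.PersistentClient(path=CHROMA_PATH)
    collection = client.get_or_create_collection(COLLECTION_NAME)
    vector_store = ChromaVectorStore(chroma_collection=collection)
    storage_context = StorageContext.from_defaults(vector_store=vector_store)
    # Chunks, embeds, and writes the documents into Chroma.
    return VectorStoreIndex.from_documents(
        documents, storage_context=storage_context, embed_model=embed_model
    )


def retriever_with_langchain(embeddings, k=4):
    """Open the same collection from LangChain and expose it as a retriever."""
    import chromadb
    from langchain_chroma import Chroma

    client = chromadb.PersistentClient(path=CHROMA_PATH)
    store = Chroma(
        client=client,
        collection_name=COLLECTION_NAME,
        embedding_function=embeddings,
    )
    return store.as_retriever(search_kwargs={"k": k})
```

The two frameworks store slightly different metadata alongside each chunk, so it is worth inspecting a few retrieved records before relying on this arrangement in production.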

LangChain Expression Language (LCEL)

LangChain Expression Language (LCEL) is a declarative way to build LLM-powered pipelines using simple, chainable components. Instead of writing complex procedural code, LCEL lets developers express a workflow—such as prompting, model invocation, parsing, and post-processing—using a clean, readable syntax. At its core, LCEL revolves around runnables, composable units that each perform a step in the pipeline. These runnables can be linked together using the pipe operator (|), making it easy to construct end-to-end flows that transform inputs into model-ready prompts, generate outputs, and parse results into structured formats.
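The pipe-based composition is easier to see with a toy model of it. The `Step` class below is not LangChain code; it is a minimal stand-in, written for this example, that shows how overloading `|` lets small units chain into a pipeline with a single `invoke` entry point.

```python
class Step:
    """Toy stand-in for a runnable: wraps a function and supports `|`."""

    def __init__(self, func):
        self.func = func

    def invoke(self, value):
        return self.func(value)

    def __or__(self, other):
        # `a | b` builds a new step that feeds a's output into b.
        return Step(lambda value: other.invoke(self.invoke(value)))


# Three placeholder stages: prompt formatting, a fake model, and a parser.
prompt = Step(lambda inputs: f"Answer briefly: {inputs['question']}")
fake_model = Step(lambda text: f"MODEL OUTPUT for <{text}>")
parser = Step(lambda text: {"answer": text.strip()})

chain = prompt | fake_model | parser

if __name__ == "__main__":
    print(chain.invoke({"question": "What is HNSW?"}))
```

In LangChain itself the same shape typically appears as a prompt template piped into a chat model and then into an output parser, with each piece implementing the shared runnable interface.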

One of LCEL’s biggest strengths is its flexibility. It allows developers to combine prompts, models, retrievers, tools, and custom Python functions into modular chains that can be reused and extended. Because LCEL is built around a standard runnable interface, the same chain can be invoked synchronously, asynchronously, in batches, or as a stream, whether it runs locally or in the cloud, without code changes. This consistency makes LCEL especially useful for production RAG systems, agent workflows, and applications requiring reproducible, maintainable LLM logic.

FAISS and How It Compares to Chroma

FAISS (Facebook AI Similarity Search) is an open-source library developed by Meta AI for performing fast, scalable similarity search and dense vector indexing. In simpler terms, FAISS helps you efficiently search through very large collections of numerical vector embeddings—such as those produced by language models, image models, recommendation engines, or other machine-learning systems. Traditional databases struggle with high-dimensional vector search because computing distances between millions or billions of vectors is computationally expensive. FAISS solves this by providing highly optimized indexing structures, GPU acceleration, and quantization techniques that dramatically speed up nearest-neighbor search, even at massive scale.

Under the hood, FAISS supports several index types, from brute-force exact search (IndexFlat) to approximate nearest-neighbor (ANN) methods such as IVF (inverted file index), PQ (Product Quantization), and HNSW (Hierarchical Navigable Small World). These structures reduce the amount of computation needed by clustering, compressing, or graph-structuring the vectors. FAISS can scale from thousands to billions of embeddings and runs on both CPUs and GPUs, with GPU support being one of its biggest performance advantages. Because of its speed and flexibility, FAISS is widely used in Retrieval-Augmented Generation (RAG), semantic search engines, recommendation systems, and large-scale ML pipelines where vector similarity is the core operation.
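The difference between exact search and a clustering-based index can be shown with a toy inverted-file structure in plain Python. This is not the FAISS API: the `ToyIVF` class is invented for illustration, and it picks random data points as centroids where a real IVF index would train them with k-means. The idea it demonstrates is the same, though: assign each vector to its nearest centroid, then scan only the `nprobe` closest lists at query time.

```python
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def flat_search(vectors, query, k):
    """Exact search: compare the query with every stored vector."""
    order = sorted(range(len(vectors)), key=lambda i: sq_dist(vectors[i], query))
    return order[:k]


class ToyIVF:
    """Toy inverted-file index: one list of vector ids per centroid."""

    def __init__(self, vectors, nlist, seed=0):
        rng = random.Random(seed)
        self.vectors = vectors
        # Assumption: random data points stand in for trained k-means centroids.
        self.centroids = [vectors[i] for i in rng.sample(range(len(vectors)), nlist)]
        self.lists = [[] for _ in range(nlist)]
        for idx, vec in enumerate(vectors):
            self.lists[self._nearest_centroids(vec, 1)[0]].append(idx)

    def _nearest_centroids(self, vec, n):
        order = sorted(
            range(len(self.centroids)), key=lambda c: sq_dist(self.centroids[c], vec)
        )
        return order[:n]

    def search(self, query, k, nprobe=1):
        """Scan only the nprobe lists whose centroids are closest to the query."""
        candidates = [
            i for c in self._nearest_centroids(query, nprobe) for i in self.lists[c]
        ]
        candidates.sort(key=lambda i: sq_dist(self.vectors[i], query))
        return candidates[:k]


_rng = random.Random(1)
vectors = [[_rng.random() for _ in range(8)] for _ in range(500)]
query = [_rng.random() for _ in range(8)]
index = ToyIVF(vectors, nlist=10)
exact = flat_search(vectors, query, k=5)
approx = index.search(query, k=5, nprobe=3)

if __name__ == "__main__":
    print("exact :", exact)
    print("approx:", approx, "overlap:", len(set(exact) & set(approx)))
```

Raising `nprobe` trades speed for recall; when it equals the number of lists, every vector is scanned and the result matches the exact search.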

FAISS vs. Chroma: Comparison Table

| Aspect | FAISS | Chroma |
| --- | --- | --- |
| Overview | A high-performance vector similarity library built by Meta AI, designed for large-scale, high-throughput search | A user-friendly, developer-oriented vector database with built-in management, metadata, and retrieval features |
| Primary Purpose | Optimized vector search library for very large datasets and fast similarity search | Full vector database with metadata, collections, and management features |
| Scalability | Extremely high; optimized C++/CUDA; best for millions+ vectors | High, but more limited on a single node; scalable with external orchestration |
| Performance | Best-in-class for speed and throughput | Fast enough for most apps; not as optimized as FAISS internally |
| Ease of Use | Low; requires more engineering knowledge | Very high; Python-native, beginner-friendly API |
| Index Types Supported | Many: IVF, HNSW, Flat, PQ, OPQ; GPU acceleration available | Mostly HNSW-based; simpler but fewer options |
| Metadata Support | None built-in | Native metadata storage and filtering |
| Persistence | Manual; store/load index files yourself | Built-in persistence and data management |
| Best For | High-scale, performance-critical RAG; embeddings >100M | Rapid prototyping, small-to-mid production RAG apps |
| Security Considerations | No built-in auth, RBAC, or encryption; must layer externally | Provides basic auth/ACLs in managed environments |
| Cloud-Native Features | None; DIY orchestration and scaling | Yes, especially in managed Chroma Cloud |
| Maturity and Ecosystem | Very mature, widely benchmarked | Newer but rapidly growing ecosystem |

Summary

FAISS is the right choice when you need maximum performance, GPU acceleration, and custom ML pipeline integration.

Chroma is the right choice when you want simplicity, native metadata, and plug-and-play RAG.

Mixture of Experts (MoE)

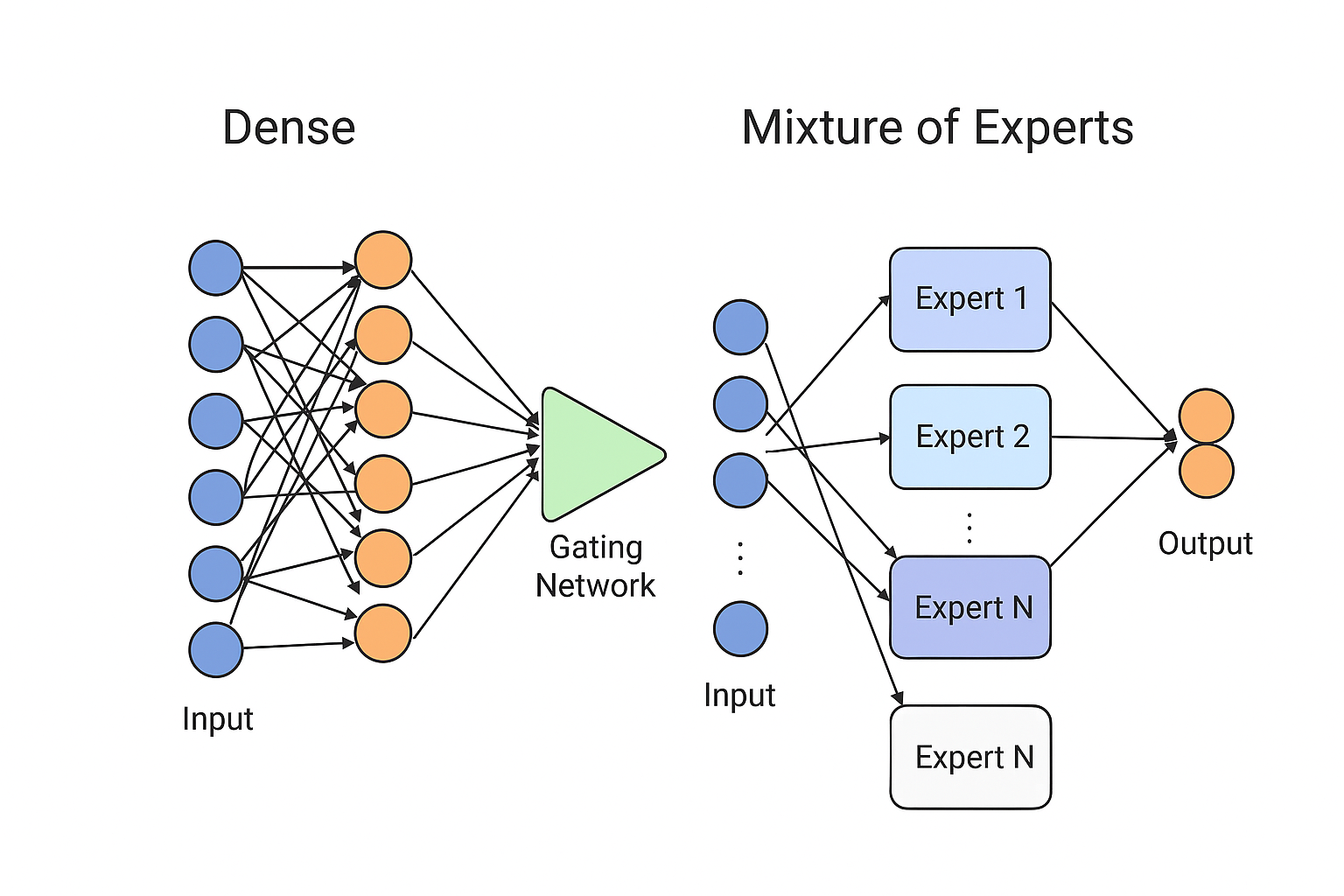

Mixture of Experts (MoE) is a neural network architecture that routes each input to only a subset of “expert” sub-networks rather than running the entire model for every computation. A gating network (also called a router) decides which experts to activate, which lets MoE models grow their parameter count without a proportional increase in compute per token. For example, Google’s Switch Transformer, Mistral AI’s Mixtral 8x7B, and Meta’s Llama 4 models all use expert routing to achieve large-model performance at significantly lower computational cost.
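The routing idea can be sketched in a few lines of plain Python. The example below assumes a linear gate, top-k selection, and a softmax over the selected scores only, which is one common design among several; the function names and the tiny "experts" are placeholders invented for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, gate_weights, experts, top_k=2):
    """Route x to the top_k experts and mix their outputs by gate weight.

    gate_weights holds one row per expert; the gate score for an expert
    is the dot product of its row with x. Experts that are not selected
    are never called, which is where the compute saving comes from.
    """
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    chosen = sorted(range(len(experts)), key=lambda e: scores[e], reverse=True)[:top_k]
    weights = softmax([scores[e] for e in chosen])

    output = [0.0] * len(x)
    for weight, e in zip(weights, chosen):
        expert_out = experts[e](x)  # assumed to return a vector as long as x
        output = [o + weight * v for o, v in zip(output, expert_out)]
    return output, chosen


if __name__ == "__main__":
    # Four placeholder experts that simply scale their input.
    experts = [lambda x, s=s: [s * v for v in x] for s in (1.0, 2.0, 3.0, 4.0)]
    gate = [[0.0, 0.0], [1.0, 0.0], [2.0, 0.0], [-1.0, 0.0]]
    print(moe_forward([1.0, 0.0], gate, experts, top_k=2))
```

With four experts and `top_k=2`, only half of the expert parameters are exercised for any given input, even though all of them contribute to the model's total capacity.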

Neural Network vs. MoE Comparison